It is always fun to get back on site after a couple of days off work. SharePoint 2010 is like an annoying little critter: if you’re not there to cuddle it, it will do the strangest things.

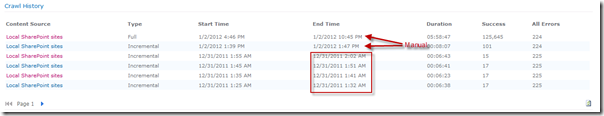

I currently have a support case open regarding some issues with crawled properties (I hope that will be a story for another day) and went into the Search Service Application admin pages in Central Admin to check a few things. While poking around I noticed that the incremental crawl hadn’t run for a few days. It had actually stopped working on the 31st of December last year (sounds like ages ago now :-). This farm has three Search Service Applications, and only this one had stopped crawling incrementally; the other two worked just fine. I fired up an incremental crawl manually and that worked, then waited for the next scheduled incremental crawl to start, and it didn’t. I also tried a manual full crawl, which worked fine, but the scheduled crawls never started.

I searched through the trace logs and event logs and found nothing that I didn’t expect to see.

I tried to Synchronize() the Search Service Instances by running some PowerShell snippets on the servers in the farm, something that has helped before when similar stuff happened. Nothing happened!
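A snippet of this kind could look roughly like the following. It is a minimal sketch, not necessarily the exact commands used here: it assumes it is run as a farm administrator on a SharePoint 2010 server, and it simply calls Synchronize() on every search service instance in the farm.

```powershell
# Load the SharePoint cmdlets when running in a plain PowerShell console
# (not needed inside the SharePoint 2010 Management Shell).
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Ask every search service instance in the farm to synchronize itself.
Get-SPEnterpriseSearchServiceInstance | ForEach-Object {
    Write-Host "Synchronizing search service instance on $($_.Server.Address)"
    $_.Synchronize()
}
```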

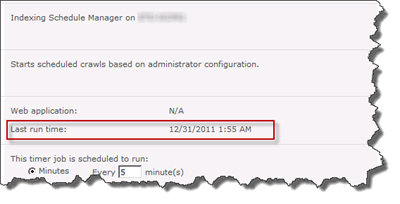

Ok, so what actually triggers the incremental crawls? They are triggered by timer jobs called Indexing Schedule Manager on XXX, and there is one job per server. In this case we have two servers running search, and when I took a look at them I noticed that one of these jobs hadn’t run since the last scheduled crawl, while the other one had been running all the time (every 5 minutes).
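The same check can be scripted instead of clicked through in Central Admin. The sketch below is an illustration under assumptions: Get-StaleJob is a hypothetical helper name, the 15 minute threshold is an arbitrary choice for a job that should run every 5 minutes, and it assumes LastRunTime is reported in the same time zone as the clock it is compared against.

```powershell
# Hypothetical helper: returns the jobs whose LastRunTime is older than MaxAge.
# It works on any objects that expose a LastRunTime property.
function Get-StaleJob {
    param(
        [Parameter(Mandatory = $true)] [AllowEmptyCollection()] [object[]] $Job,
        [TimeSpan] $MaxAge = [TimeSpan]::FromMinutes(15),
        [DateTime] $Now = (Get-Date)
    )
    $Job | Where-Object { ($Now - $_.LastRunTime) -gt $MaxAge }
}

# On a SharePoint server (needs the SharePoint snap-in and a farm to talk to):
# $jobs = Get-SPTimerJob | Where-Object { $_.DisplayName -like 'Indexing Schedule Manager*' }
# Get-StaleJob -Job $jobs | Select-Object DisplayName, LastRunTime
```

With two search servers this should list only the job that has stopped running, which is the machine to look at next.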

I tried starting the timer job both through the Central Admin web UI and through PowerShell, but it just refused to start. Now this is strange! I then took a look at the Timer Job History for this specific server and noticed that no timer jobs at all had been executed on that machine since the last time the scheduled crawl ran. Thankfully the trace logs were there, and I could see that just after 2:00 AM on the 31st of December the Timer Service stopped working (no references to owstimer.exe at all since then). There was no warning or error indicating that it had stopped working, and the sptimerv4 service was still running on the machine. The only thing in there was a warning about a Forefront conflict. Nevertheless, I needed to get it working and currently don’t have time to spend on why this happened (if it happens again, then let’s dig deeper).
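For reference, starting the jobs from PowerShell can be sketched as below. This is an assumption about how one might do it, not a record of the exact commands used; the display name filter is taken from the job name mentioned above. Note that the cmdlet only requests a run, so with a hung timer process on the target server nothing will actually execute.

```powershell
# Load the SharePoint cmdlets when running in a plain PowerShell console.
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# Request an immediate run of every Indexing Schedule Manager job.
Get-SPTimerJob |
    Where-Object { $_.DisplayName -like 'Indexing Schedule Manager*' } |
    Start-SPTimerJob

# A little later, check whether LastRunTime has moved forward.
Get-SPTimerJob |
    Where-Object { $_.DisplayName -like 'Indexing Schedule Manager*' } |
    Select-Object DisplayName, LastRunTime
```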

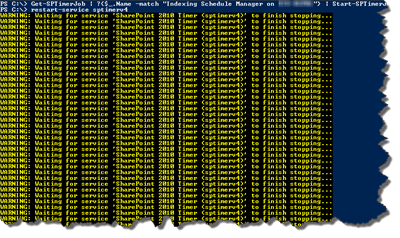

I tried a restart-service sptimerv4 on the failing machine, without luck; the service couldn’t be shut down gracefully. So I actually had to kill the owstimer.exe process and then start the timer service.
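In PowerShell terms the kill-and-start step looks roughly like this. It is a sketch that assumes an elevated session on the affected server; killing the process is a last resort, since any timer job that happens to be running is cut off mid-flight.

```powershell
# Kill the hung timer process; -Force skips the graceful shutdown that failed.
Stop-Process -Name OWSTIMER -Force -ErrorAction SilentlyContinue

# Start the SharePoint 2010 Timer service again. If the service has already
# been brought back by its recovery settings, this does nothing.
Start-Service -Name SPTimerV4

# Confirm the service state afterwards.
Get-Service -Name SPTimerV4 | Select-Object Name, Status
```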

Once the owstimer.exe process had been killed and the timer service started again, everything spun up, the incremental crawl kicked in after a couple of minutes, and it has been working perfectly since then.

The interesting thing here is that I did not see any early warning signs, errors in the logs or similar. The only way we found out that the Timer process had gone stale was that the scheduled crawl for ONE of the Search Service Applications was not running.